Artificial Intelligence (AI) is officially here to stay.

As you’ve most likely seen in recent months, artificial intelligence has been making some pretty big waves across mainstream media and social media channels with ChatGPT (built by the team at OpenAI) at the forefront of all the hype.

It’s no longer a case of if, when, or how. Artificial intelligence has arrived and we need to learn to embrace it for the better.

On the back of all this AI-related news, we’ve seen the pace of change accelerate with AI being integrated into software at every (reasonable) opportunity, and the Atlassian ecosystem has certainly not been immune from this.

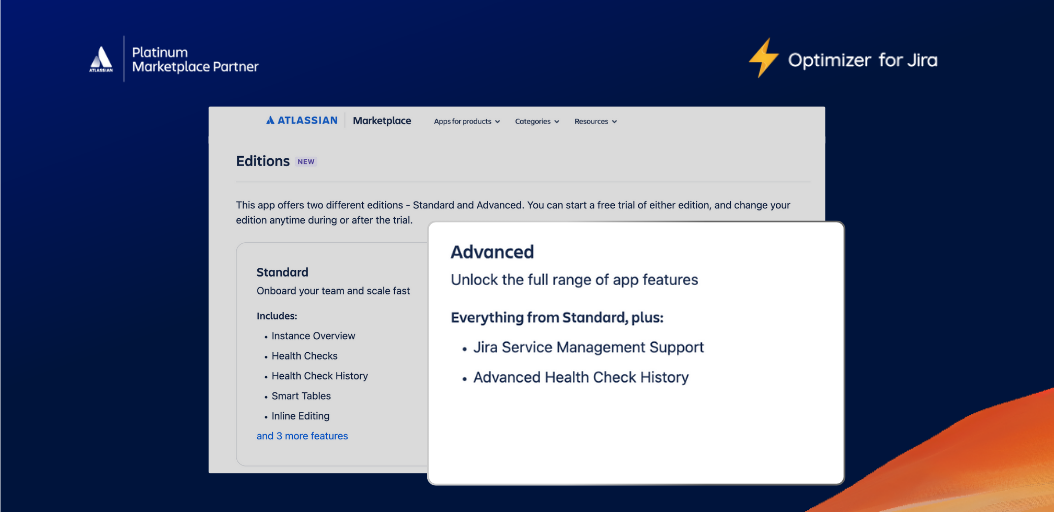

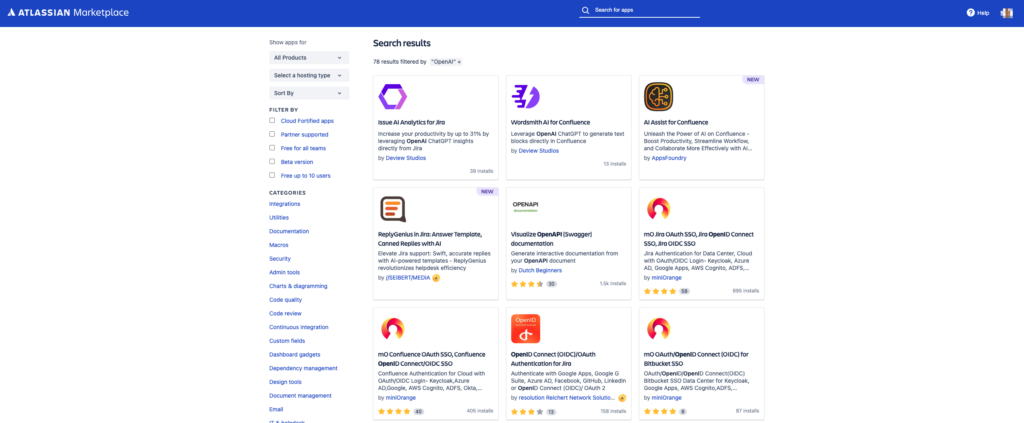

Last year, Atlassian acquired Percept.ai to help enhance frontline support capabilities in Jira Service Management and deliver better employee and customer experiences. The much-loved Atlassian Marketplace has been flooded with OpenAI or ChatGPT-related offerings, and this is continuing at rapid speed.

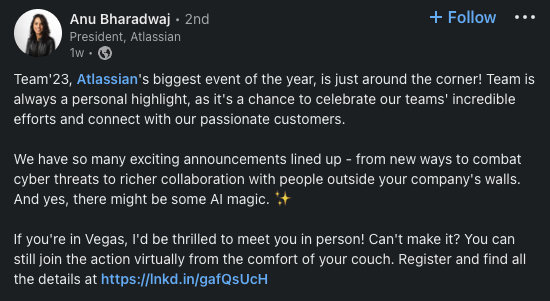

And finally, ahead of Team ’23, Anu Bharadwaj (Atlassian’s President) has teased more AI-related updates and announcements at their flagship conference that kicks off in Vegas this week.

It’s our turn to harness OpenAI’s potential

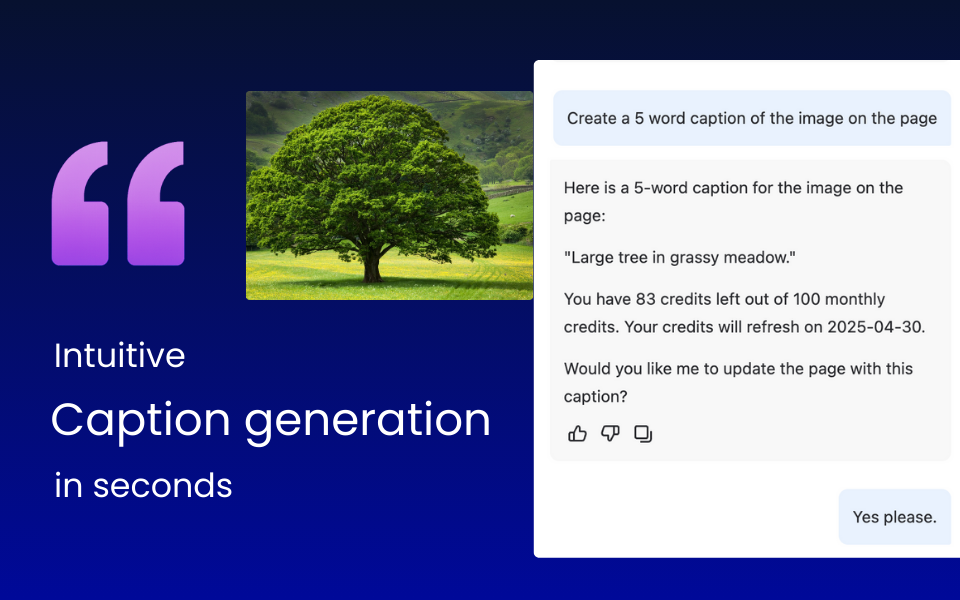

We saw a great opportunity to enhance the popular Compliance for Confluence app with the help of OpenAI and our engineers set off on this unique project several months ago.

The Compliance app was chosen as it’s primarily focused on maintaining data security and privacy in Confluence whilst identifying areas of risk or concern. Moderating the types of content contained in Confluence felt like a natural extension, helping organizations identify risks so that action can be taken. We needed a solution that could learn over time, and with the help of OpenAI, the content moderation task became far more manageable.

And with that, the project was born.

But if we’re being a little honest too, you know what they say: if you can’t beat them, join them!

Introducing content sentiment analysis and moderation to Confluence Cloud

With the power of OpenAI, we have developed a tool that will help you scan the content in Confluence pages to ensure it adheres to your organization’s appropriate use and ethical conduct policies.

The ultimate aim of this tool is to help your organization identify areas of concern or risk so that you can take action and ensure Confluence is being used in an appropriate way.

As Confluence is used by a wide range of organizations and industries, spanning both for-profit and non-profit sectors, there may be a greater risk for certain types of documents. You may also find accidental typos in pages that could be misinterpreted, and the content moderation tool can help identify these too.

In full transparency, we sincerely hope the content moderation tool never has any concerning content to find in your Confluence instance. But it can be reassuring to have an extra tool in your armory that helps you assess the information stored and shared between teams and ultimately ensure it is appropriate.

What type of content can be detected?

There are a number of content types available for moderation using the OpenAI framework, including:

- Hate

- Threatening

- Self-harm

- Sexual

- Violence

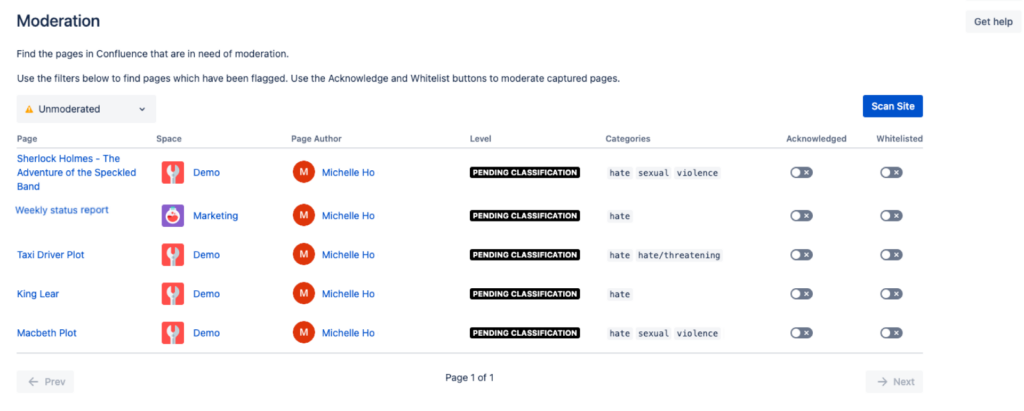

At present, the AI engine will identify these content types within individual pages and then flag them to users for moderation. There is a central search interface that admins can use to identify flagged content across the Confluence instance.
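For readers curious what this looks like at the API level, here is a minimal Python sketch of the per-page flagging idea. It is an illustration rather than the app's actual code: it assumes OpenAI's public moderation endpoint, and the helper names (`moderate_text`, `flagged_categories`, `flag_pages`) and the `{title: text}` page dictionary are hypothetical. How page content is pulled out of Confluence is left out entirely.

```python
import json
import urllib.request

# OpenAI's public moderation endpoint. Using it here is an assumption;
# the app's real integration may differ.
MODERATION_URL = "https://api.openai.com/v1/moderations"


def moderate_text(text, api_key):
    """Send one piece of text to the moderation endpoint and return its result."""
    request = urllib.request.Request(
        MODERATION_URL,
        data=json.dumps({"input": text}).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # One input string yields one entry in "results".
    return body["results"][0]


def flagged_categories(result):
    """Return the sorted names of the categories marked true in a result."""
    return sorted(name for name, hit in result["categories"].items() if hit)


def flag_pages(pages, moderate):
    """Map page title -> flagged categories, keeping only flagged pages.

    `pages` is a hypothetical {title: body_text} dict; `moderate` is any
    callable that takes text and returns a moderation result.
    """
    flagged = {}
    for title, text in pages.items():
        result = moderate(text)
        if result["flagged"]:
            flagged[title] = flagged_categories(result)
    return flagged
```

With a real API key, this could be called as `flag_pages(pages, lambda text: moderate_text(text, api_key))`. Passing the moderation function in as an argument keeps the page-scanning logic easy to test without making network calls.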

Get an exclusive demo at Team ’23

The content sentiment analysis and moderation tool is being showcased exclusively to attendees at Team ’23 in Vegas, so if you’re there, be sure to stop by booth #77 for an exclusive demo with our team!

Our experiences working with the OpenAI framework

With all the hype about OpenAI, we wanted to share our experiences working with the framework and tool for those interested. We caught up with one of the software engineers working on this exciting project, who shared their thoughts:

“Our experience with the OpenAI moderation tool has been both practical and instructive. The integration of the OpenAI framework into our platform was straightforward, and we anticipate that it will become a valuable component of our users’ content moderation strategies.

A noteworthy feature of the OpenAI moderation tool is its ability to intelligently process context and discern subtle distinctions that might not be immediately apparent. This level of sophistication has the potential to lead to more accurate content moderation, which in turn would benefit the teams relying on the tool for their platforms.

Additionally, the OpenAI moderation tool demonstrates adaptability, which we observed while providing the model with diverse examples. As we fed the model more examples, we witnessed it learning first-hand, becoming more accurate and efficient in filtering out inappropriate content.

During the development phase, we decided to use Shakespeare’s Hamlet as a benchmark to evaluate the OpenAI moderation tool’s ability to moderate content. Hamlet is a classic play known for its intricate language and violent themes. We were impressed with how the moderation tool successfully identified these themes, demonstrating a deep understanding of the content and context. By recognising the violent undertones, the tool showcased its capacity to process complex and nuanced text, further validating its potential for effective content moderation across a wide range of materials.

OpenAI’s extensive documentation has been a useful resource throughout the project, offering detailed guidance on various aspects of the technology. The documentation has been crucial for understanding best practices and addressing any development issues, enabling the efficient implementation of the moderation tool.”

It’s certainly been an interesting project and it was particularly cool to see OpenAI actively learning in front of our eyes when it was moderating these Shakespeare plays.

What does the future hold for this content moderation tool?

There are several limitations in the content moderation tool that we’d like to iron out, particularly the fact that a user has to manually access the flagged results and check them proactively.

Based on the feedback we receive from users at Team ’23, we’re planning to further enhance the content moderation tool and integrate it into Compliance for Confluence for all customers to use.

It’s definitely early days, but watch this space as we’re keen to gather feedback from our users and make further improvements to this feature!